Intro to Kubernetes

If you don't know much about Kubernetes, this post is a good place to start. I've summarized some of the key fundamentals of Kubernetes to help you get started with the technology. Let's dive in!

Kubernetes is a container orchestration platform. In a microservices architecture, it lets you distribute resources between services in proportion to the needs of each one. This makes it possible to fine-tune resource allocation, which in turn helps lower cloud bills.

How does this work?

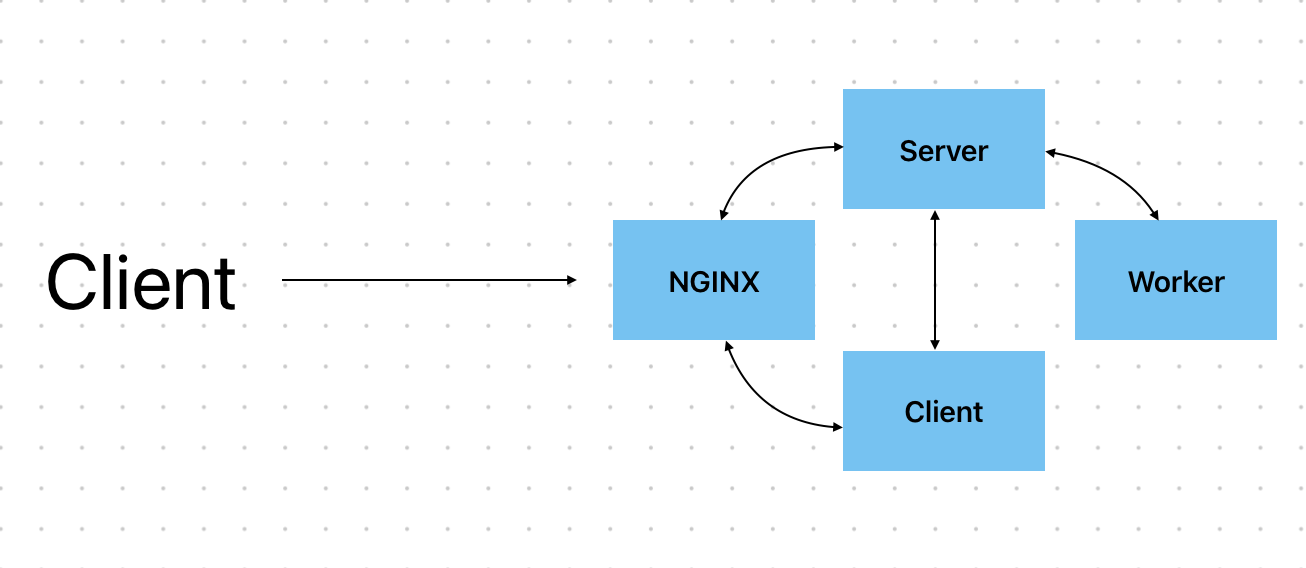

The diagram above shows the architecture of a simple image-editing application composed of an Nginx load balancer, a client, a server, and a worker. In this example, the worker is responsible for converting an image to black and white.

When deploying this application to AWS, Elastic Beanstalk can handle auto-scaling and availability by creating multiple instances of the application to meet the needs of users. However, the problem here is that the worker service clearly uses more resources than the other services. Does it make sense to scale all containers in the same manner?

If the server takes 10 ms to process a request to edit an image and the worker takes 200 ms to convert an image to black and white, the server can process ~20 requests in the time the worker needs to finish a single image.
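The arithmetic above can be written out as a small sketch. It assumes, for illustration only, that each instance handles one request at a time; the function names are hypothetical and the latencies are the ones from the example.

```python
import math

SERVER_MS = 10   # time for the server to handle one edit request (from the example)
WORKER_MS = 200  # time for the worker to convert one image (from the example)


def requests_per_second(latency_ms):
    """Throughput of one instance, assuming it handles one request at a time."""
    return 1000 / latency_ms


def workers_per_server(server_ms, worker_ms):
    """Worker instances needed to keep up with a single busy server instance."""
    return math.ceil(worker_ms / server_ms)


server_rps = requests_per_second(SERVER_MS)
worker_rps = requests_per_second(WORKER_MS)
ratio = workers_per_server(SERVER_MS, WORKER_MS)

print(f"server: {server_rps:.0f} req/s, worker: {worker_rps:.0f} req/s")
print(f"workers needed per server: {ratio}")
```

Under these assumptions, one server instance can keep roughly 20 workers busy, which is the imbalance the rest of this post is about.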

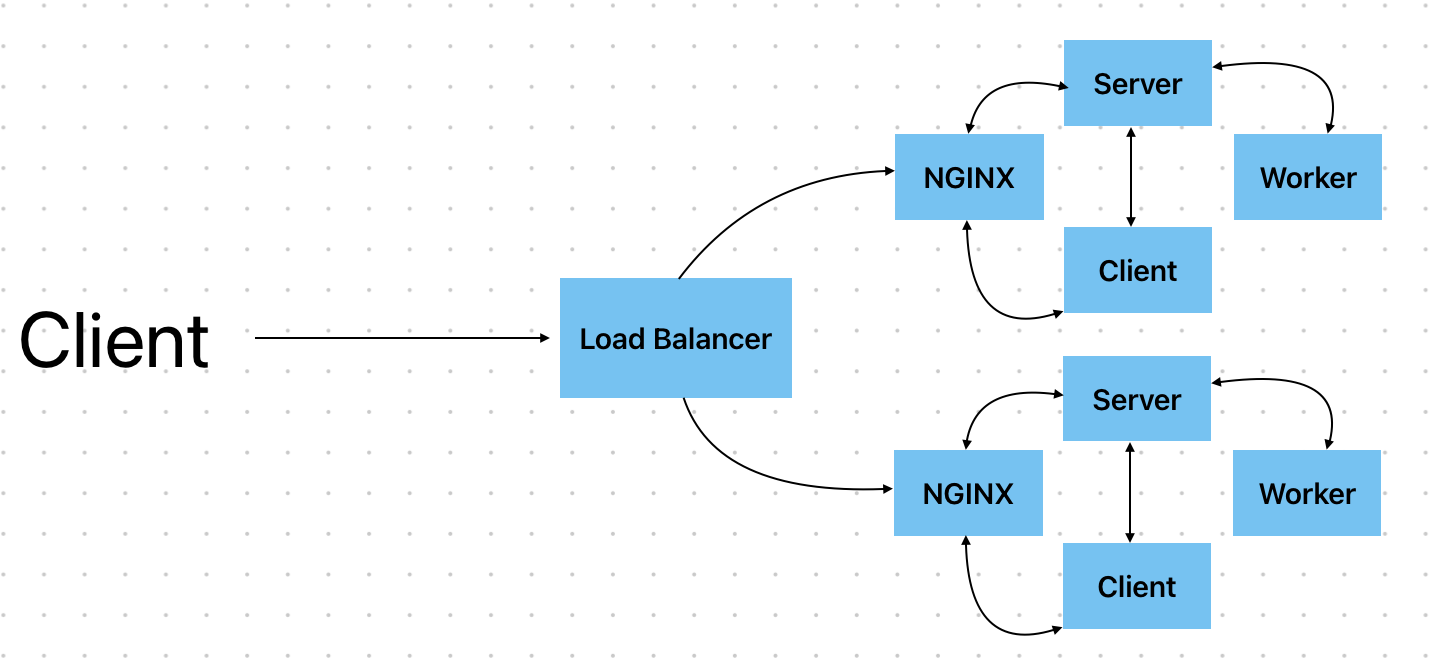

When this application is scaled with AWS Elastic Beanstalk, multiple instances of the entire application are created with a 1:1:1:1 ratio of services. Hence, to accommodate the higher load on the worker, new instances of the client, server, and load balancer must be created as well, which results in wasted resources and extra cloud costs.
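To put a rough number on that waste, here is a minimal sketch that compares scaling the whole application as one unit with scaling each service on its own. The service names and the demand figures (20 workers, one of everything else) are assumptions chosen to match the example, not measurements.

```python
# Hypothetical demand: how many instances each service actually needs.
needed = {"nginx": 1, "client": 1, "server": 1, "worker": 20}


def whole_app_scaling(needed):
    """Scale the application as one unit: every service gets as many
    copies as the most demanding service requires (1:1:1:1 ratio)."""
    copies = max(needed.values())
    return {name: copies for name in needed}


whole = whole_app_scaling(needed)
total_whole = sum(whole.values())    # containers running under 1:1:1:1 scaling
total_needed = sum(needed.values())  # containers the workload actually needs
idle = total_whole - total_needed    # containers that exist only because of the ratio

print(f"1:1:1:1 scaling runs {total_whole} containers")
print(f"per-service scaling runs {total_needed} containers")
print(f"superfluous containers: {idle}")
```

With these assumed numbers, scaling everything together runs 80 containers where 23 would do.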

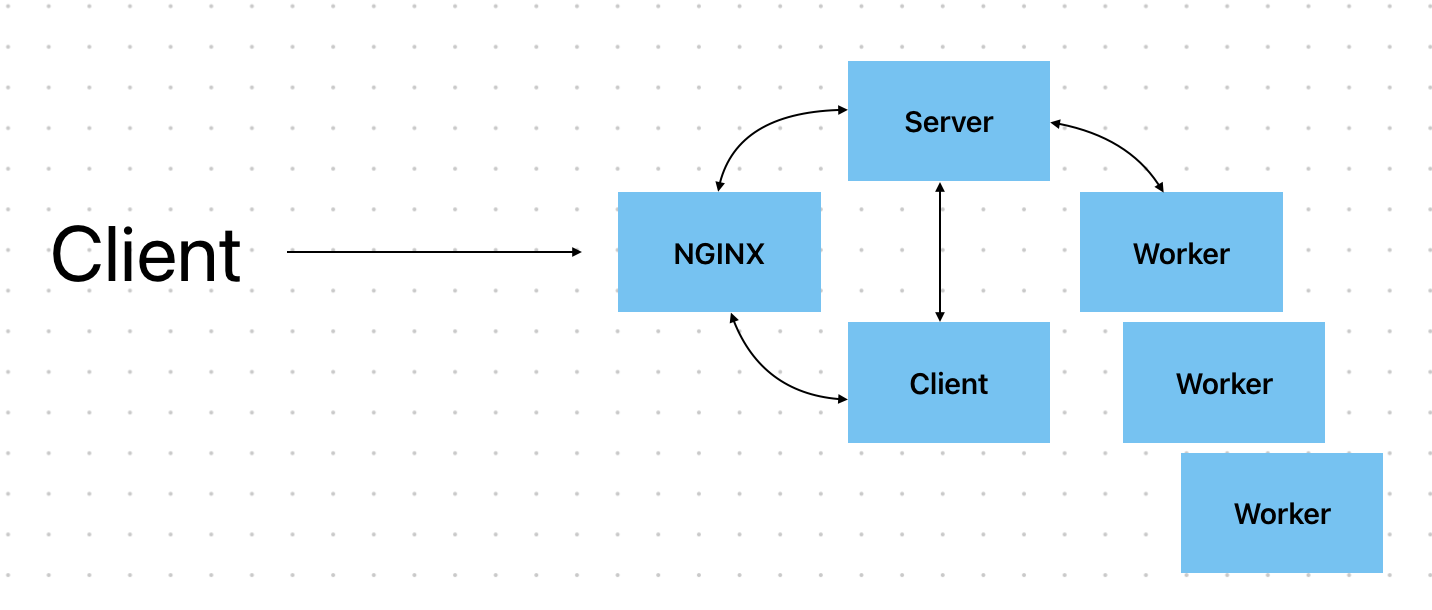

Kubernetes solves this problem by letting developers specify how many instances of each service to run at a given time. This way, they can distribute resources in proportion to the needs of each service.

For instance, one can specify that the application should run 20 instances of the worker container and one instance of each of the other containers. Strictly speaking, Kubernetes schedules pods rather than individual containers: a pod wraps one or more tightly coupled containers, so what we actually specify is the number of replicas of each pod.
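As a rough illustration, the replica count is usually declared in a Deployment manifest. The sketch below builds such manifests as Python dictionaries and prints the worker one as JSON (kubectl accepts JSON as well as YAML). The helper `deployment`, the label key `app`, and the image names are hypothetical placeholders, not part of the application described here.

```python
import json


def deployment(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest for a single-container pod."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of pod replicas to keep running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical image names; 20 workers, one of everything else.
manifests = [
    deployment("worker", "example/worker:1.0", replicas=20),
    deployment("server", "example/server:1.0", replicas=1),
    deployment("client", "example/client:1.0", replicas=1),
]

print(json.dumps(manifests[0], indent=2))
```

Saving the printed JSON to a file and running `kubectl apply -f` on it would ask the cluster to keep 20 worker pods running, independently of how many server or client pods exist.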

By creating more instances of the worker, we can ensure that the application runs smoothly without wasting resources on superfluous containers.

Now, how do we know how many instances of each pod are needed?

Developers usually start with a manual resource allocation. Then, the Kubernetes Horizontal Pod Autoscaler (HPA) can dynamically adjust the number of pods in a deployment based on metrics like CPU or memory usage. Additionally, tools like Prometheus can monitor performance and help adjust the number of pods or the resources allocated to them.
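The core of the autoscaler's decision is a simple ratio: the desired replica count is the current count multiplied by the current metric value over the target value, rounded up. The sketch below shows only that calculation plus clamping to a minimum and maximum. The function name and the example numbers are assumptions, and the real controller adds a tolerance band and stabilization behavior that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=30):
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical numbers: worker pods averaging 90% CPU against a 60% target.
scale_up = desired_replicas(current_replicas=4, current_metric=90, target_metric=60)

# Hypothetical numbers: 20 worker pods averaging 30% CPU against a 60% target.
scale_down = desired_replicas(current_replicas=20, current_metric=30, target_metric=60)

print(f"scale up to {scale_up} pods, scale down to {scale_down} pods")
```

In this simplified model, pods running hotter than the target cause a scale-up and pods running cooler cause a scale-down, which is why a manual starting allocation only needs to be approximately right.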

So, when is Kubernetes needed/not needed?

Kubernetes is a great fit when an application contains multiple types of containers that need different amounts of resources, such as CPU, memory, or number of instances. In that case, it can help decrease cloud costs by optimizing resource usage. Conversely, if an application uses a monolithic architecture or is composed of similar types of containers, then the use of Kubernetes is not warranted.